It’s November 2021, 5 years after the initial commit (and implicit public release) of a tiny library called turbo.js. It was a trivial proof of concept for GPGPU in the browser, yet it became by far my most popular project.

This post (retrospective?) is a tale of the importance of communication: a simple idea, conveyed correctly, can inspire a lot of people to take it much further!

As an internet historian, or at least someone functioning in that role, wrote in 2019, the approach I took with turbo was nothing new:

> But the dreams for GPGPU acceleration on JavaScript were not yet dead. Like the early native GPGPU pioneers of yore, who wrapped their precious data as textures, and transpiled their algorithms into OpenGL shaders, so did the new pathfinders of the JavaScript era. First as proof of concept designs, JavaScript libraries appeared that added third party GPGPU functionality on JavaScript. Today some of them have evolved into **fully functional** solutions, like gpu.js or turbo.js. These solutions will do all the hard work of preparing your data into something you can directly feed into your browsers graphics pipeline, and just like magic you will get a lovely batch of GPGPU computed fresh data. But not all that glitters is gold unfortunately.
>
> – Athanasios Margaritis (emphasis mine)

I do dispute the “fully functional” part here. In stark contrast to gpu.js, turbo.js was never meant to be a production-ready library. It was a straight port of an earlier attempt written in C++ that I called NanoCL. Implementing this naive version of GPGPU is a good “hello, world” exercise for any graphics programmer, but as Athanasios’ last sentence implies, this was a bad idea for production use cases even in 2016. Read more about that in his article linked above.
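For readers who haven’t done that exercise: the first step of the naive approach is the one the quote describes, wrapping plain data as a texture. The sketch below assumes an RGBA float texture that stores four values per texel, padded up to the smallest square that fits. `packIntoTexels` is a hypothetical helper for illustration, not turbo.js’ actual API.

```typescript
// Hypothetical helper (not turbo.js's API): lay out a flat list of floats so
// it fits a square RGBA float texture, four values per texel.
interface PackedTexture {
  width: number;      // texels per row
  height: number;     // number of rows (equal to width: the texture is square)
  data: Float32Array; // width * height * 4 floats, zero-padded at the end
}

function packIntoTexels(values: ArrayLike<number>): PackedTexture {
  // Each RGBA texel holds 4 floats, so round the texel count up (at least 1).
  const texels = Math.max(1, Math.ceil(values.length / 4));
  // Pick the smallest square texture with room for every texel.
  const side = Math.ceil(Math.sqrt(texels));
  // Typed arrays start zero-filled, so unused slots act as padding.
  const data = new Float32Array(side * side * 4);
  data.set(values); // copy the input into the front of the buffer
  return { width: side, height: side, data };
}
```

The padded `data` could then be uploaded with `gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGBA, width, height, 0, gl.RGBA, gl.FLOAT, data)`. In WebGL 1, `gl.FLOAT` textures require the `OES_texture_float` extension, which matters a great deal for device compatibility.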

Undeterred by lofty notions such as “best practices” or “usefulness”, I pressed on to prove, as best I could, that GPGPU is possible in browsers today! Or, back then.

I whipped up a benchmark. I thought to myself: big numbers always sell. How can I build a good marketing strategy for this? This might seem like an odd thing to think about for a JS library, but my main goal with these sorts of side projects is to get other people excited about the underlying idea. For me, this is the best outcome and has one major benefit: I’m not left with a growing maintenance burden over time.

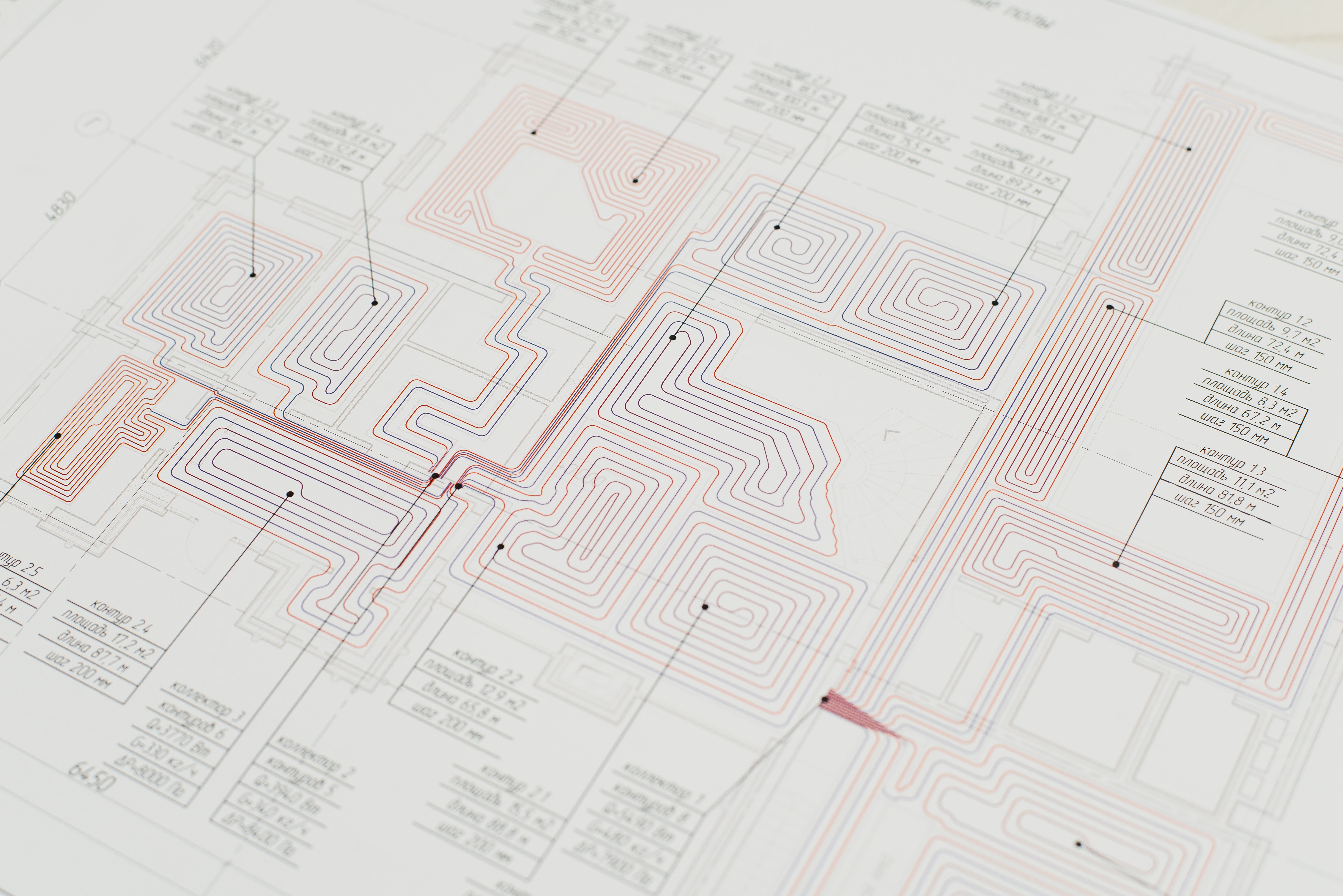

The benchmark is explained in the library’s README. It would compute a fractal function (adapted from a simple Mandelbrot) for a large set of random coordinates.
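To make the shape of that workload concrete, here is a minimal CPU-side sketch of a Mandelbrot-style escape-time function applied to random coordinates. It is not the kernel from the README: `MAX_ITER`, the coordinate range, and the interleaved `[x0, y0, x1, y1, ...]` layout are assumptions chosen for illustration.

```typescript
// Illustrative only: NOT the exact turbo.js benchmark kernel.
const MAX_ITER = 64; // assumed iteration cap

// Count how many steps of z -> z^2 + c it takes for |z| to exceed 2,
// capped at MAX_ITER, for the complex point c = cx + cy*i.
function escapeTime(cx: number, cy: number): number {
  let zx = 0;
  let zy = 0;
  let i = 0;
  while (i < MAX_ITER && zx * zx + zy * zy <= 4) {
    const nextZx = zx * zx - zy * zy + cx; // real part of z^2 + c
    zy = 2 * zx * zy + cy;                 // imaginary part of z^2 + c
    zx = nextZx;
    i++;
  }
  return i;
}

// Apply the function to every (x, y) pair stored interleaved in `coords`.
function runBenchmark(coords: Float32Array): Float32Array {
  const out = new Float32Array(coords.length / 2);
  for (let k = 0; k < out.length; k++) {
    out[k] = escapeTime(coords[2 * k], coords[2 * k + 1]);
  }
  return out;
}

// Random points in the assumed region [-2, 1] x [-1.5, 1.5].
function randomCoords(count: number): Float32Array {
  const coords = new Float32Array(count * 2);
  for (let k = 0; k < count; k++) {
    coords[2 * k] = Math.random() * 3 - 2;
    coords[2 * k + 1] = Math.random() * 3 - 1.5;
  }
  return coords;
}

// Example: time the CPU baseline on 100,000 random points.
const start = Date.now();
runBenchmark(randomCoords(100000));
console.log(`CPU baseline: ${Date.now() - start} ms`);
```

Each output depends only on its own input pair, which makes the problem embarrassingly parallel and a natural fit for a fragment shader that computes one texel per invocation.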

Next, I set out to build a smart landing page, which you can still see at turbo.js.org (or not, depending on how far link rot has progressed by the time you read this). The site runs the benchmark on load and presents a nice, shiny graph comparing the performance of the 1:1 JS and GLSL versions, along with lots of encouraging language for beginners.

I wanted to avoid a scenario where folks new to GPU programming would be turned off by having to learn a C-like language (GLSL), so I framed it as “just another piece of code to write”. I hoped this would help build momentum.

And build momentum it did. Today, turbo.js has amassed more than 2,000 stars (for what that’s worth). Much more importantly, though, it spawned great discussions on the usual forums and beyond. Oddly, it has even been referenced in research, with one paper (DOI 10.1109/MCSE.2018.108163116) going far beyond the call of duty to find benefits of turbo.js’ rudimentary approach compared to proper libraries such as gpu.js:

> More recently, libraries such as WebMonkeys and turbo.js have been developed independently. The most notable difference between these libraries and gpu.js is that these libraries have a more simplified approach towards GLSL code generation. In some ways, this is an advantage over the gpu.js approach. As the source language of the compute kernels provided by the user is already in GLSL, there is very little preparation required before using the WebGL API. At the same time, it also gives the programmer powerful low-level access to the capabilities of the GPU, allowing them to fine-tune performance to an even greater degree. However, there are some trade-offs to consider when using GLSL as the source language. GLSL has a different syntax from JavaScript and has features specifically meant for graphics computation. GLSL is not a native language with first class support in JavaScript; any GLSL code must be written either as a string literal or, stored in external places such as the DOM or separate files. More importantly, the target language of the libraries are practically locked to GLSL, which would mean that they cannot run the compute kernels on the CPU as plain JavaScript, or migrate the backend to a different API as easily as gpu.js.

More time probably went into the paragraph above than into the 200 LOC that make up turbo.js! Others were a bit more realistic:

> [a downside of] Turbo.js is the inability to run on a big number of android devices, since it requires the OES_texture_float WebGL v1 extension that is lacking in older generation Adreno GPUs, and almost all Mali GPU’s. Together with the fact that is offers no automatic CPU fallback, this makes Turbo.js a very bad candidate when it comes to platform compatibility and interoperability.

I’m actually rather glad this hacky demo didn’t get used for “HIGH-PERFORMANCE DATA VISUALIZATION IN MEDICAL AND OTHER APPLICATIONS”.
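The missing CPU fallback criticized in that quote is not much code on its own. The sketch below assumes a minimal structural interface so it can run outside a browser; `chooseBackend` and `runKernel` are hypothetical names, and the `gpuRun` callback stands in for a WebGL implementation that isn’t shown. It relies only on the fact that WebGL’s `getExtension` returns `null` for unsupported extensions.

```typescript
// Minimal structural stand-in for a WebGL context: a real
// WebGLRenderingContext also has a getExtension(name) method.
interface ExtensionSource {
  getExtension(name: string): unknown;
}

type Backend = "webgl" | "cpu";

// Hypothetical helper: use the GPU path only if a context exists AND it
// exposes OES_texture_float (required for float textures in WebGL 1).
function chooseBackend(gl: ExtensionSource | null): Backend {
  if (gl === null) return "cpu"; // no WebGL context at all
  return gl.getExtension("OES_texture_float") ? "webgl" : "cpu";
}

// Hypothetical dispatcher: run the GPU implementation when supported,
// otherwise map the equivalent JavaScript kernel over the input.
function runKernel(
  gl: ExtensionSource | null,
  input: Float32Array,
  cpuKernel: (x: number) => number,
  gpuRun: (input: Float32Array) => Float32Array,
): Float32Array {
  if (chooseBackend(gl) === "webgl") return gpuRun(input);
  return input.map(cpuKernel);
}

// In a browser, the context would come from something like:
// const gl = document.createElement("canvas").getContext("webgl");
```

Keeping a plain JavaScript version of every kernel is exactly the trade-off the earlier paper describes: it doubles the code to maintain, but it means unsupported devices get a slower answer instead of no answer.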

I was especially honored when turbo.js was mentioned in Seth Samuel’s talk, Arbitrary Computation on the GPU Using WebGL (slides).

I saw it streamed by German broadcaster BR_NEXT from JS Kongress Munich and had a good chuckle when I heard him ask, “Is the author of turbo.js in the audience?” I was visiting my parents at the time and showed it to my dad, and to my amusement his immediate response was “So how much money are you making off of this?”

Having had the difficult talk every father and son should have (the one about the profitability of open-source projects), I turned my attention to other side projects and quickly forgot about turbo.js altogether.

Now, I’ve come full circle and joined GitHub. Here we use our actual handles as account names, so it turns out picking “turbo” at the time really paid off in the end.

I’ve since archived the repo, but the name lives on.